Full stack web development can be daunting to get into until you reach the point where your development environment starts to feel like home. Docker has been the single most important tool for me to get to that point. Whenever I want to create a project that involves a web server and a database, I start with a Dockerfile that lets me spin up a test environment on the fly on my own desktop.

Docker has enabled ordinary developers to set up virtual environments without being industry specialists. In the dark times before Docker, I tried to install everything on my Windows desktop to test a web application. That worked so poorly that I started deploying to the server hosting my site and testing there instead. At my first job, over thirty developers shared the same test environment and the same database, deploying our code changes on top of each other. I experienced the benefits of Docker when my office adopted it, and I quickly started tinkering with it at home.

I want to show off my Docker setup because I spent a lot of time making a better Dockerfile than the ones I found online. This is how simple setting up Docker for a full stack project can be.

But first, you need to install Docker Desktop on your machine.

You will need Docker Desktop to be running before you can use the docker commands.

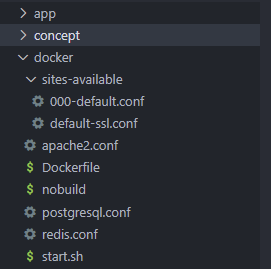

I have put all the code that would have been in the root of the project into an app folder. This lives next to a docker folder containing all of the files necessary to get a test environment running in Docker Desktop. Ignore the concept folder, which is probably full of flow charts and chicken scratch.

The meat of this is the Dockerfile itself. This file is the blueprint for the image you are creating. I like to make my project's Dockerfile start from a recent version of Ubuntu and install everything I need. This creates an environment that mimics what the production environment will look like.

I'm going to go through the Dockerfile first one piece at a time. It took me some time to get a Dockerfile working the way I needed it to.

FROM ubuntu:18.04

# define environment variables

ENV POSTGRES_VERSION=12

ENV PYTHONPATH=/app/python

First, I use FROM to tell Docker to start from the version of Ubuntu I want. Then I use ENV to set any environment variables I need.

# install packages

RUN apt-get update

RUN DEBIAN_FRONTEND=noninteractive apt-get install -y language-pack-en-base

RUN export LC_ALL=en_US.UTF-8

RUN export LANG=en_US.UTF-8

RUN apt-get update

RUN DEBIAN_FRONTEND=noninteractive LC_ALL=en_US.UTF-8 apt-get install -y software-properties-common sudo

RUN apt-get update --fix-missing

RUN apt-get -y upgrade

RUN apt-get autoremove

RUN DEBIAN_FRONTEND=noninteractive LC_ALL=en_US.UTF-8 apt-get install -y --no-install-recommends \

supervisor \

apache2 \

vim

RUN apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv-keys B97B0AFCAA1A47F044F244A07FCC7D46ACCC4CF8

RUN sh -c 'echo "deb http://apt.postgresql.org/pub/repos/apt/ `lsb_release -cs`-pgdg main" >> /etc/apt/sources.list.d/pgdg.list'

RUN apt-get update

RUN DEBIAN_FRONTEND=noninteractive apt-get -y install postgresql-$POSTGRES_VERSION \

postgresql-client-$POSTGRES_VERSION \

postgresql-contrib-$POSTGRES_VERSION

RUN apt-get update

RUN DEBIAN_FRONTEND=noninteractive apt-get -y install xclip

RUN apt-get update

RUN DEBIAN_FRONTEND=noninteractive apt-get -y install cron

RUN apt-get update

RUN DEBIAN_FRONTEND=noninteractive apt-get -y install python3.6

RUN apt-get update

RUN DEBIAN_FRONTEND=noninteractive apt-get -y install python3-pip

RUN apt-get update

RUN DEBIAN_FRONTEND=noninteractive apt-get -y install libcurl4-openssl-dev libssl-dev

RUN apt-get update

RUN DEBIAN_FRONTEND=noninteractive apt-get -y install nodejs

RUN apt-get update

RUN DEBIAN_FRONTEND=noninteractive apt-get -y install libssl1.0-dev

RUN apt-get update

RUN DEBIAN_FRONTEND=noninteractive apt-get -y install nodejs-dev

RUN apt-get update

RUN DEBIAN_FRONTEND=noninteractive apt-get -y install node-gyp

RUN apt-get update

RUN DEBIAN_FRONTEND=noninteractive apt-get -y install npm

RUN apt-get update

RUN DEBIAN_FRONTEND=noninteractive apt-get -y install curl

RUN apt-get update

RUN DEBIAN_FRONTEND=noninteractive apt-get -y install net-tools

RUN curl -sL https://deb.nodesource.com/setup_14.x -o setup_14.sh

RUN sh ./setup_14.sh

RUN apt-get update

RUN DEBIAN_FRONTEND=noninteractive apt-get -y install nodejs

RUN apt-get update

RUN DEBIAN_FRONTEND=noninteractive apt-get -y install chromium-browser chromium-chromedriver snapd squashfs-tools

RUN apt-get clean

This chunk is big and heavy-handed, but it installs every necessary package from apt and forces each install to run without needing any user interaction. For the most part, I just needed to alternate update and install commands and clean at the end. The other strange bits in here were solutions to issues I encountered along the way. Sometimes I needed to pull in another repository or add extra pieces. For whatever reason, installing software-properties-common wasn't working until I followed it with update --fix-missing, upgrade and autoremove. I'm not touching it again because that was the biggest pain and it works now.

# deploy apache2 config

COPY apache2.conf /etc/apache2/apache2.conf

# configure apache2

RUN a2enmod headers

RUN a2enmod expires

RUN a2enmod rewrite

RUN a2enmod ssl

RUN a2enmod proxy

RUN a2enmod proxy_http

RUN a2enmod proxy_balancer

RUN a2enmod lbmethod_byrequests

ADD sites-available /etc/apache2/sites-available

Next I set up apache2. I have a conf file to replace the default with, I enable all the modules I need, and I copy over all of my site configurations. Copying over a custom apache2.conf file is optional and can be commented out. Whenever I want to change conf files in my Docker image, this is how I prefer to handle it: I keep a modified copy of the file and replace the original after installing packages. I have also seen people replace text in a file from within their Dockerfile. I personally found that method a bit more difficult to manage, since it was hard to see the context of the changes being made.
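For comparison, the text-replacement approach might look something like the sketch below. It runs against a scratch file rather than a real apache2.conf, and the ServerTokens directive is only an example setting, not one taken from the configuration in this project.

```shell
#!/bin/bash
# Scratch file standing in for a config file (contents are illustrative only)
conf="$(mktemp)"
printf '%s\n' 'ServerTokens OS' 'KeepAlive On' > "$conf"

# Rewrite one directive and write the result to a new file,
# leaving the source file untouched
sed 's/^ServerTokens .*/ServerTokens Prod/' "$conf" > "$conf.new"

cat "$conf.new"
```

The edit itself is a single line, but nothing in it shows what the rest of the file looks like, which is the context problem described above.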

# deploy postgres configuration

COPY postgresql.conf /etc/postgresql/$POSTGRES_VERSION/main/postgresql.conf

RUN echo "host all all 0.0.0.0/0 md5" >> /etc/postgresql/$POSTGRES_VERSION/main/pg_hba.conf

I make some small changes to the PostgreSQL configuration by copying over my own files. I ended up making a couple of changes to postgresql.conf that other projects are unlikely to need. You can comment out this line and only start doing this kind of thing when the need arises. For pg_hba.conf, the one thing you will likely run into is needing to append a specific line to the end of the file to connect via software like DBeaver. That's what the echo command above is doing.
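In the Dockerfile, that echo runs once per build, so the line is only ever added once. If the same append were moved into a script that can run repeatedly (an assumption, since the setup here keeps it in the Dockerfile), a guard like the following sketch would avoid duplicate entries. The append_once helper is hypothetical, and the example writes to a scratch file instead of the real pg_hba.conf.

```shell
#!/bin/bash
# Append a line to a file only if that exact line is not already there.
# append_once is a hypothetical helper, not part of the original setup.
append_once() {
    # -x matches whole lines only, -F treats the text as a fixed string
    grep -qxF -- "$1" "$2" 2>/dev/null || printf '%s\n' "$1" >> "$2"
}

# Demonstrate on a scratch file: the second call changes nothing
demo_file="$(mktemp)"
append_once "host all all 0.0.0.0/0 md5" "$demo_file"
append_once "host all all 0.0.0.0/0 md5" "$demo_file"
cat "$demo_file"
```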

# install npm packages

RUN npm install -g pm2

RUN npm install -g pg

RUN npm install -g cors

RUN npm install -g express

RUN npm install -g memory-cache

RUN npm install -g node-cache

RUN npm install -g express-basic-auth

RUN npm install -g express-session

RUN npm install -g passport

RUN npm install -g passport-local

RUN npm install -g passport-one-session-per-user

RUN npm install -g rate-limiter-flexible

RUN npm install -g connect-ensure-login

RUN npm install -g nodemon

RUN npm install -g ts-node

RUN npm install -g jest

RUN npm install -g supertest

RUN npm install -g ts-jest

I install the npm packages I need.

# install python packages

RUN pip3 install psycopg2

RUN pip3 install feedparser

RUN pip3 install beautifulsoup4

RUN pip3 install selenium

RUN pip3 install random_user_agent

RUN pip3 install css_html_js_minify

RUN pip3 install slimit

I install every Python library I need.

# application folder

RUN mkdir -p /app && rm -fr /var/www/html && ln -s /app /var/www/html

I set up an /app directory which I'm going to dump my project into.

# start.sh script is the next step

ADD start.sh /start.sh

RUN chmod 755 /*.sh

EXPOSE 80 5432

CMD ["/start.sh"]

The last step is to copy a startup script, expose the ports for apache and psql, then have start.sh run when the Docker container starts.

This is my startup script right now:

#!/bin/bash

# start apache2

echo "------------------------------------------------------------------"

echo "|"

echo "| I am starting apache2"

echo "|"

echo "------------------------------------------------------------------"

cd /etc/apache2/sites-available/

for FILE in *; do

a2ensite $FILE

done

service apache2 start

# start postgres

POSTGRESQL_BIN=/usr/lib/postgresql/12/bin/postgres

POSTGRESQL_CONFIG_FILE=/etc/postgresql/12/main/postgresql.conf

POSTGRESQL_DATA=/var/lib/postgresql/12/main

POSTGRESQL_SINGLE="sudo -u postgres $POSTGRESQL_BIN --single --config-file=$POSTGRESQL_CONFIG_FILE"

# If there is no postgresql data directory, create the directory and set config data path

if [ ! -d $POSTGRESQL_DATA ]; then

mkdir -p $POSTGRESQL_DATA

chown -R postgres:postgres $POSTGRESQL_DATA

sudo -u postgres /usr/lib/postgresql/12/bin/initdb -D $POSTGRESQL_DATA

ln -s /etc/ssl/certs/ssl-cert-snakeoil.pem $POSTGRESQL_DATA/server.crt

ln -s /etc/ssl/private/ssl-cert-snakeoil.key $POSTGRESQL_DATA/server.key

fi

# Setting the default password

$POSTGRESQL_SINGLE <<< "ALTER USER postgres WITH PASSWORD 'postgres';" > /dev/null

# Starting the postgresql server

echo "------------------------------------------------------------------"

echo "|"

echo "| I am starting postgres"

echo "|"

echo "------------------------------------------------------------------"

nohup sudo -u postgres $POSTGRESQL_BIN --config-file=$POSTGRESQL_CONFIG_FILE &

# building the postgresql server

sleep 5s

cd /app/sql

echo "------------------------------------------------------------------"

echo "|"

echo "| I am building postgres"

echo "|"

echo "------------------------------------------------------------------"

./sqlbuild.sh

# start nodejs with pm2

cd /app/site/server/

echo "------------------------------------------------------------------"

echo "|"

echo "| I am starting Node.js"

echo "|"

echo "------------------------------------------------------------------"

pm2 start ecosystem.config.js

# this keeps the Docker container going and displays pm2 logs where I can see them faster

echo "------------------------------------------------------------------"

echo "|"

echo "| I am switching to printing pm2 logs"

echo "|"

echo "------------------------------------------------------------------"

pm2 logs

The big echo statements are to make it easier for me to read when I look at the logs view in Docker Desktop.

First, apache2 and Postgres need to be running. I use a loop to enable whatever site configurations I made without having to edit this file when file names change. The code to run the database is painfully messy. I might trim it down one day, but for now it's one of those magical pieces of code that finally just works, and everything breaks whenever it's touched.

After getting the database running, I can build it. For that, I have another script that creates and populates tables and everything else the database needs. That is something I may dive into another day.
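One possible alternative to a fixed sleep before the build is to poll until Postgres actually answers. This is a minimal sketch, not what start.sh currently does: wait_for is a hypothetical helper, and the commented usage assumes pg_isready (part of the PostgreSQL client tools) is available inside the container.

```shell
#!/bin/bash
# Retry a command once per second until it succeeds or the attempts run out.
# wait_for is a hypothetical helper name.
wait_for() {
    local attempts="$1"
    shift
    while [ "$attempts" -gt 0 ]; do
        # Run the remaining arguments as a command, hiding its output
        if "$@" > /dev/null 2>&1; then
            return 0
        fi
        attempts=$((attempts - 1))
        sleep 1
    done
    return 1
}

# Possible use in start.sh (assumes pg_isready is on the PATH):
#   wait_for 30 pg_isready -h localhost -p 5432 || exit 1
#   ./sqlbuild.sh
```

The trade-off is a few more lines in exchange for not guessing how long the database takes to come up.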

The last step is to start up Node.js and then start printing its logs. Note that this script needs to continue running because it is the container's starting point, and the container will die if this process ends. That's a bit hackier than I would like it to be and there are certainly more robust solutions, but this is an environment for testing. It needs to be stable enough for a developer to test on, not for end users to beat up. I would rather have something this simple so I can spend less time maintaining my own testing environment. But if you hate yourself, you can bring in Supervisor to manage processes.

There is another important component here which is my startdocker script which exists right outside of the docker directory and looks something like this:

#!/bin/bash

cd docker

latestfile=$(find . -type f -printf '%T@ %p\n' | sort -n | tail -1 | cut -f2- -d" ")

# Adam likes to make a nobuild file to mark when the Docker image is already built. It will not be the latest file once the Dockerfile or a conf file changes

if [[ "${latestfile}" == "./nobuild" ]] ; then

echo "skipping docker build"

else

echo "kicking off docker build"

docker build -t docker-myprojectname .

if [ $? != 0 ] # if there was an error, end deployment

then

exit 1

fi

touch nobuild

fi

echo "Me: Docker NO"

docker container rm --force myprojectname

echo "Docker: Docker YES"

cd ../

docker run --name myprojectname -d -p 80:80 -p 5432:5432 --mount type=bind,source=$PWD/app,target=/app -e OVERRIDE=true docker-myprojectname

echo "Completed at $(date)"

The "docker build" command essentially takes the blueprints you have written and builds the disk image. After running this command, you now have your virtual Ubuntu machine and it is basically turned off.

I did this silly thing where I decided that the "docker build" command could be followed by touching an arbitrary file. Whenever that file is the latest file in the docker directory, it means there's no need to rebuild. This way I can run the same script mindlessly whenever I want to restart Docker, whether changes were made or not, and it knows whether I want it to rebuild the Docker image. That part is not necessary at all. It is just a product of my laziness, but it works so nicely.
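The same idea can also be expressed with find's -newer test, which asks directly whether anything has changed since the marker was touched. This is a sketch of a variation rather than the script I actually use, and needs_build is a hypothetical function name.

```shell
#!/bin/bash
# Succeed (exit status 0) when a rebuild is needed: either the nobuild marker
# is missing, or some other file in the directory is newer than it.
# needs_build is a hypothetical helper name.
needs_build() {
    local dir="$1"
    local marker="$dir/nobuild"
    [ -f "$marker" ] || return 0
    # -newer compares modification times against the marker file
    [ -n "$(find "$dir" -type f ! -name nobuild -newer "$marker" | head -n 1)" ]
}

# Possible use from the project root:
#   if needs_build docker; then
#       docker build -t docker-myprojectname docker && touch docker/nobuild
#   fi
```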

I am running "docker container rm" with --force to stop and remove the container before "docker run" starts it up again. This effectively restarts it or turns it on depending on whether it is currently running. Again, my laziness.

Echoing the time at the end is there for my sanity so I can look at my terminal and remember when I last started it. I just want to point out that it wasn't added for nothing.

The "docker run" command is complicated but well documented. We give it the container name and the ports we want to have access to, and we bind the app folder in the project to the /app folder in the container. Note that apache2 likes to use port 80 and Postgres likes to use port 5432.
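To make the pieces of that command easier to see, here is a sketch that puts one option on each line and lets the project name and host ports be overridden. PROJECT, HTTP_PORT, and DB_PORT are hypothetical variables that do not exist in my script, and start_container is a hypothetical wrapper. It also quotes the bind-mount source so a project path containing spaces still works.

```shell
#!/bin/bash
# Run the given command prefix followed by the docker run options.
# PROJECT, HTTP_PORT and DB_PORT are hypothetical overrides with defaults
# matching the values used in this article.
start_container() {
    local project="${PROJECT:-myprojectname}"
    local http_port="${HTTP_PORT:-80}"
    local db_port="${DB_PORT:-5432}"
    "$@" \
        --name "$project" \
        -d \
        -p "${http_port}:80" \
        -p "${db_port}:5432" \
        --mount "type=bind,source=${PWD}/app,target=/app" \
        -e OVERRIDE=true \
        "docker-${project}"
}

# Dry run: print the command instead of starting anything
start_container echo docker run

# Real run would be: start_container docker run
```

Only the host side of each port mapping changes; inside the container apache2 and Postgres keep listening on 80 and 5432.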

This script is not actually necessary. You could run these commands yourself on a command line, but why type a lot when typing a little will do?

After running this, in the Docker Desktop window, you should see the light turn green on your running instance. You should be able to click on it to see the logs. The logs should end with your initial Node.js logs from pm2 showing you whether your web server is running or if an npm package is not properly installed. You should be able to connect to the database on localhost:5432 and to your site in a browser at localhost:80. Of course, you still have to build the rest of the architecture but it shouldn't take much to make it work from here.

The best part is that as soon as you have multiple projects that want different versions of various software or run on completely different stacks, all you need to do is copy and edit the Dockerfile and surrounding scripts. You can run them at the same time without worrying about conflicts, although you may need to adjust port numbers.